Optimizing Video Streaming Performance with a Token Pool System

Performance can make or break a user experience, and the simplest solutions are often the most effective, even for complex performance problems. This case study looks at how Akshay Saini, the creator of NamasteDev, reduced video streaming latency with a straightforward yet powerful solution: a token pool system.

Background

NamasteDev is an education platform where thousands of students access video content daily on various tech topics. The platform offers both premium and non-premium video content. Securing premium videos required integrating with a third-party service that provided Digital Rights Management (DRM) protection. To access these videos, users needed a token from this third-party service.

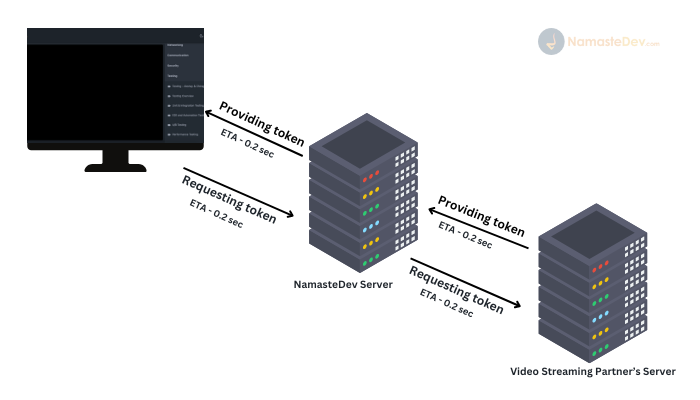

Initially, the process of obtaining a token was quite simple but inefficient in terms of performance. Here’s how it worked:

- A user would click on a video.

- An API call was made to the NamasteDev server.

- The server verified the user’s credentials and eligibility.

- For premium videos, the server requested a token from the third-party DRM provider.

- The token was forwarded to the user’s browser, enabling video playback.

This whole process, as depicted below, resulted in a delay of 0.6 seconds on average per token request due to the multiple network calls involved.
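The flow above can be sketched roughly as follows. This is only an illustration, not NamasteDev's actual code: `handlePlaybackRequest`, the `deps` object, `isEligible`, and the response shape are all hypothetical names, and returning no token for non-premium videos is an assumption. The point is that every premium request waits on a round trip to the DRM provider.

```javascript
// Hypothetical sketch of the original (pre-pool) request flow.
// `deps.isEligible` and `deps.fetchTokenFromDRM` are assumed stand-ins
// for the platform's real eligibility check and DRM client.
async function handlePlaybackRequest(user, video, deps) {
  // Verify the user's credentials and eligibility.
  if (!deps.isEligible(user, video)) {
    return { status: 403, token: null };
  }

  // Assumption: non-premium videos play without a DRM token.
  if (!video.premium) {
    return { status: 200, token: null };
  }

  // Premium video: one network round trip to the DRM provider per request.
  const token = await deps.fetchTokenFromDRM(video.id);

  // Forward the token to the browser so playback can start.
  return { status: 200, token };
}
```

Because the DRM call sits directly on the request path, its latency is paid by every premium playback request.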

The Challenge

As the platform and its user base grew, performance bottlenecks came to light. Monitoring the API calls showed that fetching video tokens was slower than the team wanted. The average latency of 0.6 seconds per request started affecting the user experience, especially during peak times.

Akshay Saini and the team wanted to reduce this delay to create a smoother and faster video experience for NamasteDev’s users.

The Solution: Implementing a Token Pool

Akshay came up with the idea of managing an in-memory token pool to address the latency issues. Since the third-party DRM service didn’t provide an API for token pooling, the team decided to build their own solution internally.

The strategy involved:

- Initializing a token pool on server startup.

- Pre-fetching tokens for the most frequently accessed videos and storing them in-memory.

- Reusing tokens for video playback requests, significantly reducing the need for external API calls.
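A minimal sketch of the startup step, under stated assumptions: `initTokenPool` and the injected `fetchTokens(videoId, count)` function are hypothetical names, and the article does not say how prefetch failures were handled. Here a failed prefetch simply leaves that video's list empty so the server can still start.

```javascript
// Per-video pool size; the article mentions 100 tokens per popular video.
const POOL_SIZE = 100;

// Builds the initial pool for a list of frequently accessed video IDs.
// `fetchTokens(videoId, count)` is an assumed async function that resolves
// to an array of tokens from the DRM provider.
async function initTokenPool(videoIds, fetchTokens, size = POOL_SIZE) {
  const pool = {};

  // Prefetch for all videos in parallel; allSettled never rejects,
  // so one failing video does not abort startup.
  const results = await Promise.allSettled(
    videoIds.map((videoId) => fetchTokens(videoId, size))
  );

  results.forEach((result, i) => {
    // On failure, start with an empty list for that video.
    pool[videoIds[i]] = result.status === 'fulfilled' ? result.value : [];
  });

  return pool;
}
```

Which videos count as "most frequently accessed" is left to the caller here; the article does not describe how that list was chosen.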

Token Pool Structure

The token pool was implemented as a simple JavaScript object. The team fetched 100 tokens in advance for each frequently accessed video and stored them in this pool. The structure looked something like this:

    TOKEN_POOL = {
      video_id_1: ['token1', 'token2', 'token3', ..., 'token100'],
      video_id_2: ['token1', 'token2', 'token3', ..., 'token100'],
      video_id_3: ['token1', 'token2', 'token3', ..., 'token100'],
      video_id_4: ['token1', 'token2', 'token3', ..., 'token100'],
      ...
    }

Token Retrieval Logic

When a user requested a video:

- The system first checked if there were tokens available in the `TOKEN_POOL`.

- If tokens existed for the requested video, one would be retrieved using `pop()`, reducing the request time drastically.

- If no tokens were available, a fresh token was fetched from the DRM provider, added to the pool, and returned to the user.

Here’s the core logic:

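    // Returns a DRM token for the video, preferring the in-memory pool.
    // Assumes fetchTokenFromDRM returns a promise, like fetchTokensFromDRM below.
    async function getTokenForVideo(videoId) {
      const tokens = TOKEN_POOL[videoId];
      if (tokens && tokens.length > 0) {
        // Pool hit: no network call needed.
        return tokens.pop();
      }
      // Pool miss: fetch a fresh token from the DRM provider.
      const newToken = await fetchTokenFromDRM(videoId);
      // Adding the video to the pool lets the refill job stock it from now on.
      TOKEN_POOL[videoId] = [newToken];
      return newToken;
    }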
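To see the hit and miss paths side by side, here is a small self-contained sketch with a stubbed DRM call. The stub, the `drmCalls` counter, and `demo` are hypothetical additions for illustration, and the sketch assumes the DRM call is asynchronous.

```javascript
// A tiny pool with one stocked video.
const TOKEN_POOL = { video_id_1: ['token1', 'token2'] };

// Stub standing in for the real DRM provider call (an assumption);
// it counts how often the "network" is hit.
let drmCalls = 0;
async function fetchTokenFromDRM(videoId) {
  drmCalls += 1;
  return `fresh-${videoId}-${drmCalls}`;
}

// Same logic as described above: use the pool first, fall back to the provider.
async function getTokenForVideo(videoId) {
  const tokens = TOKEN_POOL[videoId];
  if (tokens && tokens.length > 0) {
    return tokens.pop();
  }
  const newToken = await fetchTokenFromDRM(videoId);
  TOKEN_POOL[videoId] = [newToken];
  return newToken;
}

async function demo() {
  const hit = await getTokenForVideo('video_id_1');  // served from the pool
  const miss = await getTokenForVideo('video_id_9'); // falls back to the stub
  return { hit, miss, drmCalls };
}

demo().then((result) => console.log(result));
// { hit: 'token2', miss: 'fresh-video_id_9-1', drmCalls: 1 }
```

Only the second request touches the provider, which is where the latency saving comes from.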

Continuous Token Replenishment

To ensure that the pool remained adequately stocked, a cron job was set up to refill tokens every 5 minutes. This kept a constant supply of tokens available, reducing the need to request fresh tokens in real time.

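    const cron = require('node-cron');

    // Every 5 minutes, top each video's pool back up to 100 tokens.
    cron.schedule('*/5 * * * *', async () => {
      for (const videoId of Object.keys(TOKEN_POOL)) {
        const missing = 100 - TOKEN_POOL[videoId].length;
        if (missing <= 0) continue;
        try {
          const newTokens = await fetchTokensFromDRM(videoId, missing);
          TOKEN_POOL[videoId].push(...newTokens);
        } catch (err) {
          // One failed refill should not stop the others.
          console.error(`Token refill failed for ${videoId}:`, err);
        }
      }
    });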
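The refill decision itself can be separated from the scheduling and the network calls, which makes it easy to check in isolation. The helper below is a hypothetical sketch (`computeRefillPlan` is not from the original post); it only works out how many tokens each video is short of the target.

```javascript
// Target stock per video, matching the 100 tokens used in the article.
const TARGET_POOL_SIZE = 100;

// Returns { videoId: tokensNeeded } for every video below the target.
// Videos that are already fully stocked are left out of the plan.
function computeRefillPlan(pool, target = TARGET_POOL_SIZE) {
  const plan = {};
  for (const [videoId, tokens] of Object.entries(pool)) {
    const deficit = target - tokens.length;
    if (deficit > 0) {
      plan[videoId] = deficit;
    }
  }
  return plan;
}

const examplePlan = computeRefillPlan({
  video_id_1: new Array(100).fill('token'), // full
  video_id_2: new Array(40).fill('token'),  // 60 short
  video_id_3: [],                           // empty
});
console.log(examplePlan); // { video_id_2: 60, video_id_3: 100 }
```

A scheduled job could then request exactly the planned number of tokens per video.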

The Outcome: Dramatic Latency Reduction

After implementing the token pool, the team observed a 93.33% decrease in API latency. The response time dropped from 0.6 seconds to an impressive 0.04 seconds. This drastic improvement resulted in a much smoother user experience, especially for students accessing premium content.

Before:

- Latency: ~0.6 seconds per token request

After:

- Latency: ~0.04 seconds per token request
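The reported percentage follows directly from the two latency figures. A quick check, with `percentDecrease` as an illustrative helper name:

```javascript
// Percentage decrease from `before` to `after`.
function percentDecrease(before, after) {
  return ((before - after) / before) * 100;
}

// 0.6 s before and 0.04 s after, as reported in the article.
console.log(percentDecrease(0.6, 0.04).toFixed(2)); // "93.33"
```

Put another way, 0.6 s divided by 0.04 s is roughly a 15x speedup per token request.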

Here’s a visual representation of the performance improvement:

Key Takeaways

This case study demonstrates how a simple yet effective solution can address complex performance challenges. By using an in-memory token pool, Akshay Saini and the NamasteDev team significantly reduced latency and improved the overall user experience on their platform.

Lessons Learned:

- Token pooling can be a powerful method to reduce API latency for frequently accessed resources.

- Pre-fetching and caching resources can significantly enhance performance, especially in high-traffic scenarios.

- Continuous monitoring of API performance is crucial to identify and address bottlenecks before they impact the user experience.
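As a rough illustration of the monitoring point, the sketch below summarizes a batch of latency samples. It is an assumption about how such numbers might be tracked, not a description of NamasteDev's tooling; `summarizeLatencies` and the sample values are hypothetical.

```javascript
// Summarizes latency samples (in milliseconds) as mean, p95, and max.
// Returns null when there are no samples.
function summarizeLatencies(samplesMs) {
  if (samplesMs.length === 0) {
    return null;
  }
  // Sort a copy so the caller's array is not modified.
  const sorted = [...samplesMs].sort((a, b) => a - b);
  const mean = sorted.reduce((sum, x) => sum + x, 0) / sorted.length;
  // Nearest-rank 95th percentile.
  const p95 = sorted[Math.ceil(0.95 * sorted.length) - 1];
  return { mean, p95, max: sorted[sorted.length - 1] };
}

console.log(summarizeLatencies([40, 600, 50, 30]));
// { mean: 180, p95: 600, max: 600 }
```

Tracking a high percentile alongside the mean helps surface the slow pool-miss requests that an average alone can hide.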

If you’re facing similar issues with API performance or latency, consider adopting a token pooling strategy or another pre-fetching mechanism to optimize your system’s responsiveness.

TIP: Want to boost your own app’s performance? Think about caching or pooling commonly used resources. This can save precious time on recurring requests!

Conclusion

This case study serves as a practical example of how straightforward ideas can lead to massive improvements in application performance. With a clear understanding of system bottlenecks and the right solution, Akshay Saini successfully improved NamasteDev’s video streaming service, providing a more seamless experience for its users.

NOTE: You can read the original blog post by Akshay Saini on the NamasteDev platform.

Platform: NamasteDev

Focus: Enhancing API performance using an in-memory token pool